projects

A growing collection of my projects.

work

on-going

LLMs for Reinforcement Learning

Developed the ProPS and ProPS+ methods, which generate parameterized RL policies directly from LLMs by exploiting their capacity for linguistic and numerical reasoning, coupled with an iterative refinement process. This process is driven by a closed-loop feedback mechanism that provides the LLM with policy reward data, which, together with semantic and contextual task information, enables effective in-context learning. After evaluating the approach on 15 tasks against state-of-the-art RL methods, we are now extending it to improve the RL optimization capabilities of smaller, open-source LLMs. We are actively fine-tuning models such as Qwen2.5 and Qwen3, and initial experiments with 14B models show promising results in generating RL policies of a similar scale.
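The closed feedback loop described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the ProPS code: the prompt format, the function names (`build_prompt`, `props_style_loop`), and the stand-ins `toy_reward` (a two-parameter "environment") and `mock_llm` (a fake LLM that reads the best parameters from its prompt and perturbs them) are all hypothetical, so the sketch runs without a real model.

```python
import ast
import random
from typing import Callable, List, Tuple

Params = List[float]

def build_prompt(task_description: str, history: List[Tuple[Params, float]]) -> str:
    """Combine semantic task information with the (params, reward) feedback so far."""
    lines = [f"Task: {task_description}",
             "Previously evaluated policy parameters and their rewards:"]
    for params, reward in history:
        lines.append(f"params={params} reward={reward:.2f}")
    lines.append("Propose new parameters that achieve a higher reward.")
    return "\n".join(lines)

def props_style_loop(llm: Callable[[str], Params],
                     evaluate: Callable[[Params], float],
                     task_description: str,
                     iterations: int) -> Tuple[Params, float]:
    """Closed loop: the LLM proposes parameters, the environment scores them,
    and every reward is fed back into the next prompt for in-context learning."""
    history: List[Tuple[Params, float]] = []
    for _ in range(iterations):
        params = llm(build_prompt(task_description, history))
        history.append((params, evaluate(params)))
    return max(history, key=lambda pair: pair[1])  # best (params, reward) seen

# --- Hypothetical stand-ins so the sketch runs without a real LLM or environment ---

TARGET = [1.0, -2.0]

def toy_reward(params: Params) -> float:
    """Toy 'environment': reward is the negative squared distance to TARGET."""
    return -sum((p - t) ** 2 for p, t in zip(params, TARGET))

def mock_llm(prompt: str) -> Params:
    """Mock LLM: parses the best-scoring parameters from the prompt and perturbs them."""
    best, best_reward = [0.0, 0.0], float("-inf")
    for line in prompt.splitlines():
        if line.startswith("params="):
            params_str, reward_str = line[len("params="):].rsplit(" reward=", 1)
            if float(reward_str) > best_reward:
                best, best_reward = ast.literal_eval(params_str), float(reward_str)
    return [p + random.uniform(-0.5, 0.5) for p in best]

if __name__ == "__main__":
    random.seed(0)
    params, reward = props_style_loop(mock_llm, toy_reward, "Move a point to a target.", 200)
    print(params, reward)
```

Keeping the full reward history in the prompt is what lets the model condition on past outcomes; a real system would swap `mock_llm` for an actual LLM call and `toy_reward` for policy rollouts.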

Relevant Papers

Role of Data and Fine-Tuning on LLM Agents

Curating data recipes for TBench and SWEBench agent tasks to evaluate the role of data and fine-tuning in LLM agents. The image shows an LLM agent working on interactive tasks (taken from the AgentBench GitHub repository).

completed

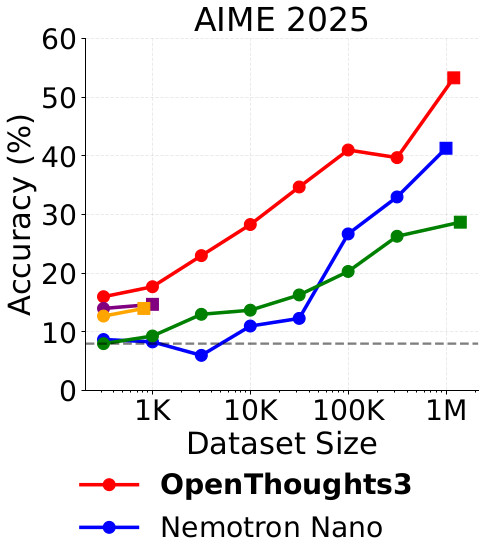

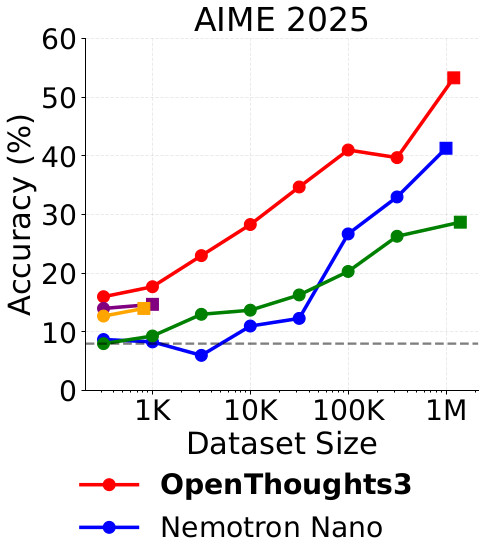

Role of Data in Fine-Tuning LLMs for Reasoning Tasks

Empirically evaluated the role of data construction and training recipes in fine-tuning LLMs for reasoning tasks. The project produced the OpenThought fine-tuned models, whose early versions matched DeepSeek-R1 performance on benchmarks such as AIME and LiveCodeBench.

Relevant Papers

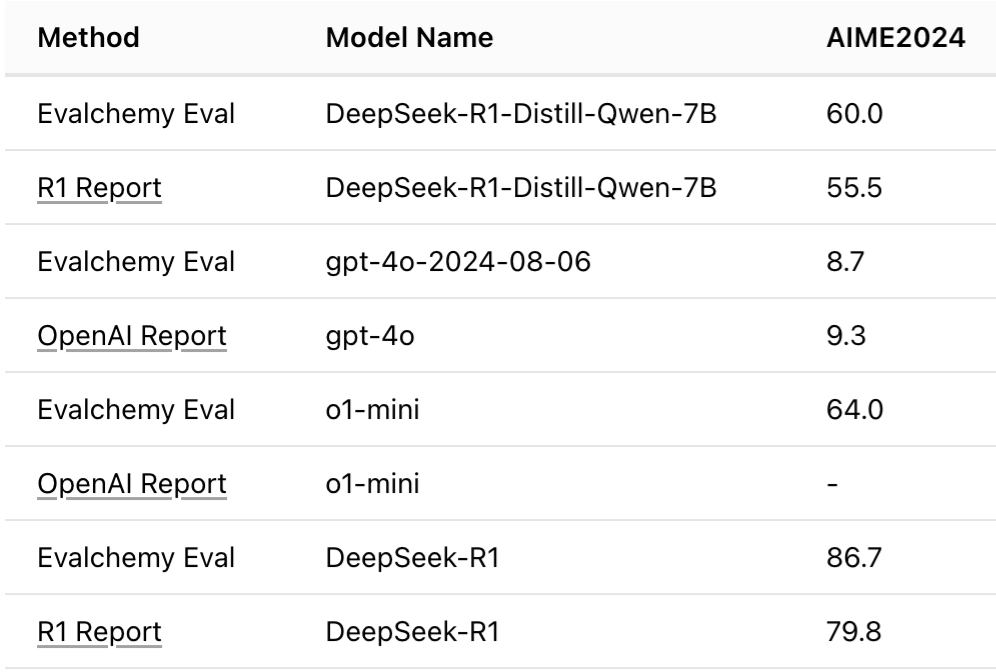

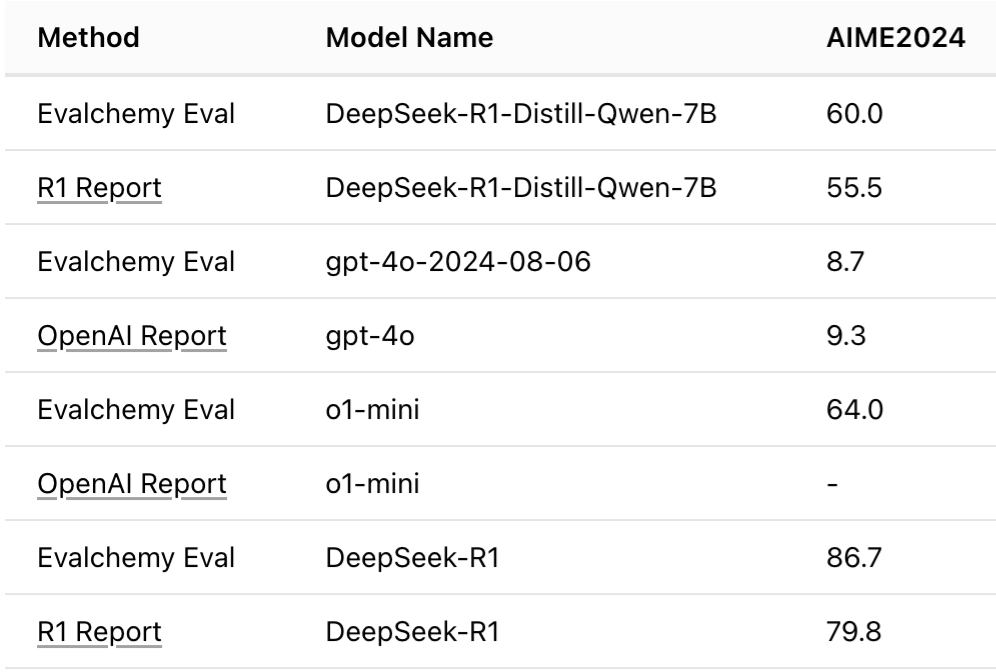

Evalchemy — One-stop Evaluations for LLMs

Designed a one-stop shop for evaluating LLMs on more than 30 benchmarks spanning downstream tasks such as coding, mathematical reasoning, and instruction following. It is built on top of LM-Eval-Harness.

LLM for Natural Language Understanding - A Demonstration

Developed an end-to-end pipeline in the AI2Thor kitchen domain: a robot receives natural-language commands, uses an LLM to parse them into a formal language, and a PDDL+ planner converts the resulting formal structure into a plan.
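The command-to-plan pipeline can be sketched in miniature as below. This is a hypothetical illustration, not the project's code: the LLM is replaced by `mock_parse_command`, a keyword-based stand-in that maps "put the X in the Y" to a goal fact, and the PDDL+ planner is replaced by a plain STRIPS-style breadth-first search over a toy kitchen domain (`move`, `pick`, `place`), since that is enough to show how a parsed goal becomes a plan.

```python
from collections import deque
from typing import FrozenSet, List, NamedTuple, Optional, Tuple

Fact = Tuple[str, ...]
State = FrozenSet[Fact]

class Action(NamedTuple):
    name: str
    pre: FrozenSet[Fact]     # facts that must hold before the action
    add: FrozenSet[Fact]     # facts the action makes true
    delete: FrozenSet[Fact]  # facts the action makes false

def kitchen_actions(locations: List[str], objects: List[str]) -> List[Action]:
    """Ground a toy kitchen domain: move between locations, pick and place objects."""
    actions = []
    for a in locations:
        for b in locations:
            if a != b:
                actions.append(Action(f"move {a} {b}", frozenset({("robot-at", a)}),
                                      frozenset({("robot-at", b)}), frozenset({("robot-at", a)})))
        for o in objects:
            actions.append(Action(f"pick {o} {a}",
                                  frozenset({("robot-at", a), ("at", o, a), ("hand-empty",)}),
                                  frozenset({("holding", o)}),
                                  frozenset({("at", o, a), ("hand-empty",)})))
            actions.append(Action(f"place {o} {a}",
                                  frozenset({("robot-at", a), ("holding", o)}),
                                  frozenset({("at", o, a), ("hand-empty",)}),
                                  frozenset({("holding", o)})))
    return actions

def plan(init: State, goal: FrozenSet[Fact], actions: List[Action]) -> Optional[List[str]]:
    """Breadth-first search: returns a shortest action sequence reaching the goal."""
    frontier = deque([(init, [])])
    seen = {init}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for act in actions:
            if act.pre <= state:
                nxt = (state - act.delete) | act.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [act.name]))
    return None

def mock_parse_command(command: str) -> FrozenSet[Fact]:
    """Hypothetical stand-in for the LLM: 'put the <obj> in the <loc>' -> goal facts."""
    words = command.lower().rstrip(".").split()
    obj = words[words.index("the") + 1]
    return frozenset({("at", obj, words[-1])})

if __name__ == "__main__":
    acts = kitchen_actions(["counter", "fridge", "sink"], ["apple"])
    init = frozenset({("robot-at", "counter"), ("at", "apple", "counter"), ("hand-empty",)})
    print(plan(init, mock_parse_command("Put the apple in the fridge."), acts))
```

For the command "Put the apple in the fridge." this prints the three-step plan pick, move, place. The division of labor mirrors the description: the language model only has to produce a formal goal, and the planner is responsible for ordering the actions.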

Relevant Papers

Open-World AI Agent

Designed the Hydra framework for domain-independent agents operating in dynamic environments. A dynamic environment is one in which the assumptions the agent made during training change during testing. The agent needs to (1) understand which assumptions have changed and (2) accommodate those changes in its own model. We used PDDL+ to model these environments and incorporate the changes.

This was a DARPA Sail-On project undertaken by PARC, with over 10 academic and industry teams participating; ours was the top-performing team. A third party performed the evaluation on the Angry Birds game, Minecraft-based grid environments, and 3D versions of Cartpole. We also presented a real-world demonstration in which fighter-jet trajectories were updated in response to changes in the environment.
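As a rough illustration of steps (1) and (2) above, the sketch below assumes the agent's model is a small Python simulator of a falling object (not PDDL+, and not Hydra's code). A change in assumptions is flagged when the model's predictions diverge from observations by more than a threshold, and the model is then repaired by picking the gravity value that best explains what was observed. The simulator, the 0.5 threshold, and the candidate grid are hypothetical choices.

```python
from typing import List, Sequence

def simulate(y0: float, v0: float, gravity: float, dt: float, steps: int) -> List[float]:
    """Agent's internal model: height of a falling object under constant gravity (Euler steps)."""
    ys, y, v = [y0], y0, v0
    for _ in range(steps):
        v -= gravity * dt
        y += v * dt
        ys.append(y)
    return ys

def inconsistency(predicted: Sequence[float], observed: Sequence[float]) -> float:
    """Largest gap between what the model predicted and what was observed."""
    return max(abs(p - o) for p, o in zip(predicted, observed))

def is_novel(observed: Sequence[float], y0: float, v0: float, gravity: float,
             dt: float, threshold: float = 0.5) -> bool:
    """Step (1): detect that a modeling assumption no longer holds."""
    predicted = simulate(y0, v0, gravity, dt, len(observed) - 1)
    return inconsistency(predicted, observed) > threshold

def repair_gravity(observed: Sequence[float], y0: float, v0: float, dt: float,
                   candidates: Sequence[float]) -> float:
    """Step (2): grid-search the parameter that best explains the observations."""
    return min(candidates,
               key=lambda g: inconsistency(simulate(y0, v0, g, dt, len(observed) - 1), observed))

if __name__ == "__main__":
    # At test time the world's gravity differs from the value the agent assumed (9.81).
    observed = simulate(10.0, 0.0, 12.0, 0.1, 10)
    print(is_novel(observed, 10.0, 0.0, 9.81, 0.1))
    print(repair_gravity(observed, 10.0, 0.0, 0.1, [8.0 + 0.5 * i for i in range(17)]))
```

Here a consistency check drives both detection and repair; a real agent would repair a richer model (for example, a PDDL+ domain) instead of a single scalar.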

Relevant Papers

Nyx PDDL+ Planner

PDDL+ is a modeling language for hybrid systems with both discrete and continuous dynamics and exogenous events. Nyx is a planner for PDDL+, the most expressive variant of the PDDL family; it discretizes time using a step size defined for the task and returns a plan whose actions are executable at the discretized time points.
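To make the time-discretization idea concrete, here is a minimal discretize-and-search sketch on a toy hybrid domain, an assumption for illustration loosely modeled on the classic "car" example. At each time point the planner may apply an instantaneous action, an exogenous speed-limiter event fires automatically, and a continuous "moving" process advances the distance over one step of length `dt`. It is written in Python rather than PDDL+, uses plain breadth-first search, and is not Nyx's implementation; `step`, `discretized_plan`, and `execute` are hypothetical names.

```python
from collections import deque
from typing import List, Optional, Tuple

ACTIONS = ("accelerate", "decelerate", "noop")

def step(d: float, v: int, action: str, dt: float, v_max: int) -> Tuple[float, int]:
    """Advance the hybrid state (distance d, velocity v) by one time step of length dt."""
    # 1. Instantaneous action at the current time point.
    if action == "accelerate":
        v += 1
    elif action == "decelerate" and v > 0:
        v -= 1
    # 2. Exogenous event: a speed limiter fires whenever v exceeds v_max.
    v = min(v, v_max)
    # 3. Continuous process "moving" (dd/dt = v), integrated over dt.
    d += v * dt
    return d, v

def discretized_plan(goal_d: float, dt: float, horizon: int, v_max: int) -> Optional[List[str]]:
    """Breadth-first search over discretized time; returns one action per time step.
    The goal is to be stopped (v == 0) at distance >= goal_d."""
    frontier = deque([(0.0, 0, [])])
    seen = {(0.0, 0)}
    while frontier:
        d, v, actions = frontier.popleft()
        if d >= goal_d and v == 0:
            return actions
        if len(actions) == horizon:
            continue
        for action in ACTIONS:
            nd, nv = step(d, v, action, dt, v_max)
            if (nd, nv) not in seen:
                seen.add((nd, nv))
                frontier.append((nd, nv, actions + [action]))
    return None

def execute(actions: List[str], dt: float, v_max: int) -> Tuple[float, int]:
    """Re-run a plan from the initial state, a simple stand-in for plan validation."""
    d, v = 0.0, 0
    for action in actions:
        d, v = step(d, v, action, dt, v_max)
    return d, v

if __name__ == "__main__":
    dt = 1.0
    plan = discretized_plan(goal_d=10.0, dt=dt, horizon=20, v_max=3)
    if plan is not None:
        print([(i * dt, a) for i, a in enumerate(plan)], execute(plan, dt, 3))
```

With `dt=1.0`, `v_max=3`, and a goal distance of 10, the search finds a 7-step plan. A smaller `dt` yields finer-grained timing at the cost of a larger search space, which is the central trade-off of time discretization.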

Relevant Papers
